Cellular and Molecular Neurobiology

Introduction to Neurons and Neuronal Networks

John H. Byrne, Ph.D., Department of Neurobiology and Anatomy, McGovern Medical School

The three pounds of jelly-like material found within our skulls is the most complex machine on Earth and perhaps the universe. Its phenomenal features would not be possible without the roughly 100 billion neurons that make it up, and, importantly, the connections between those neurons. Fortunately, much is known about the properties of individual neurons and simple neuronal networks, and aspects of complex neuronal networks are beginning to be unraveled. This chapter will begin with a discussion of the neuron, the elementary node or element of the brain, and then move to a discussion of the ways in which individual neurons communicate with each other. What makes the nervous system such a fantastic device and distinguishes the brain from other organs of the body is not that it has 100 billion neurons, but that nerve cells are capable of communicating with each other in such a highly structured manner as to form neuronal networks. To understand neural networks, it is necessary to understand the ways in which one neuron communicates with another through synaptic connections and the process called synaptic transmission. Synaptic transmission comes in two basic flavors: excitation and inhibition. Just a few interconnected neurons (a microcircuit) can perform sophisticated tasks such as mediating reflexes, processing sensory information, generating locomotion, and mediating learning and memory. More complex networks (macrocircuits) consist of multiple embedded microcircuits. Macrocircuits mediate higher brain functions such as object recognition and cognition. So, multiple levels of networks are ubiquitous in the nervous system. Networks are also prevalent within neurons. These nanocircuits constitute the underlying biochemical machinery for mediating key neuronal properties such as learning and memory and the genesis of neuronal rhythmicity.

The Neuron

Basic morphological features of neurons.

The 100 billion neurons in the brain share a number of common features (Figure 1). Neurons are different from most other cells in the body in that they are polarized and have distinct morphological regions, each with specific functions. Dendrites are the region where one neuron receives connections from other neurons. The cell body or soma contains the nucleus and the other organelles necessary for cellular function. The axon is a key component of nerve cells over which information is transmitted from one part of the neuron (e.g., the cell body) to the terminal regions of the neuron. Axons can be rather long, extending up to a meter or so in some human sensory and motor nerve cells. The synapse is the terminal region of the axon, and it is here that one neuron forms a connection with another and conveys information through the process of synaptic transmission. The aqua-colored neuron in Figure 1 (click on “Neuron Connected to a Postsynaptic Neuron”) is referred to as the postsynaptic neuron. The tan-colored terminal to the left is consequently referred to as the presynaptic neuron. One neuron can receive contacts from many different neurons. Figure 1 (click on “Neuron Receiving Synaptic Input”) shows an example of three presynaptic neurons contacting the one tan-colored postsynaptic neuron, but it has been estimated that one neuron can receive contacts from up to 10,000 other cells. Consequently, the potential complexity of the networks is vast. Similarly, any one neuron can contact up to 10,000 postsynaptic cells. (Note that the tan-colored neuron that was presynaptic to the aqua-colored neuron is postsynaptic to the pink, green, and blue neurons. So most “presynaptic” neurons are “postsynaptic” to some other neuron(s).)

Figure 1 (click on “The Synapse”) also shows an expanded view of the synapse. Note that the presynaptic cell is not directly connected to the postsynaptic cell. The two are separated by a gap known as the synaptic cleft. Therefore, to communicate with the postsynaptic cell, the presynaptic neuron needs to release a chemical messenger. That messenger is found within the neurotransmitter-containing vesicles (the blue dots represent the neurotransmitter). An action potential that invades the presynaptic terminal causes these vesicles to fuse with the inner surface of the presynaptic membrane and release their contents through a process called exocytosis. The released transmitter diffuses across the gap between the pre- and the postsynaptic cell and very rapidly reaches the postsynaptic side of the synapse, where it binds to specialized receptors that “recognize” the transmitter. The binding to the receptors leads to a change in the permeability of ion channels in the membrane and, in turn, a change in the membrane potential of the postsynaptic neuron known as a postsynaptic potential (PSP). So signaling among neurons is associated with changes in the electrical properties of neurons. To understand neurons and neuronal circuits, it is necessary to understand the electrical properties of nerve cells.

Figure 1

Resting Potentials and Action Potentials

Figure 2

Resting potentials. Figure 2 shows an example of an idealized nerve cell. Placed in the extracellular medium is a microelectrode. A microelectrode is nothing more than a small piece of glass capillary tubing that is stretched under heat to produce a very fine tip, on the order of 1 micron in diameter. The microelectrode is filled with a conducting solution and then connected to a suitable recording device such as an oscilloscope or chart recorder. With the electrode outside the cell in the extracellular medium, zero potential is recorded because the extracellular medium is isopotential. If, however, the electrode penetrates the cell such that the tip of the electrode is now inside the cell, a sharp deflection is seen on the recording device. A potential of about -60 millivolts, inside negative with respect to the outside, is recorded. This potential is called the resting potential and is constant for indefinite periods of time in the absence of any stimulation. If the electrode is removed, a potential of zero is recorded again. Resting potentials are not just characteristics of nerve cells; all cells in the body have resting potentials. What distinguishes nerve cells and other excitable membranes (e.g., muscle cells) is that they are capable of changing their resting potential. Nerve cells use these changes to integrate and transmit information, whereas muscle cells use them to produce muscle contractions.

Action potentials. Figure 3 shows another sketch of an idealized neuron. This neuron has been impaled with one electrode to measure the resting potential and a second electrode called the stimulating electrode. The stimulating electrode is connected through a switch to a battery. If the battery is oriented such that the positive pole is connected to the switch, closing the switch will make the inside of the cell somewhat more positive depending upon the size of the battery. (Such a decrease in the polarized state of a membrane is called a depolarization.) Figure 3 is an animation in which the switch is repeatedly opened and closed and each time it is closed a larger battery is applied to the circuit. Initially, the switch closure produces only small depolarizations. However, the potentials become larger and eventually the depolarization is sufficiently large to trigger an action potential, also known as a spike or an impulse. The action potential is associated with a very rapid depolarization to achieve a peak value of about +40 mV in just 0.5 milliseconds (msec). The peak is followed by an equally rapid repolarization phase.

Figure 3

The voltage at which the depolarization becomes sufficient to trigger an action potential is called the threshold. If a larger battery is used to generate a suprathreshold depolarization, a single action potential is still generated and the amplitude of that action potential is the same as the action potential triggered by a just-threshold stimulus. The simple recording in Figure 3 illustrates two very important features of action potentials. First, they are elicited in an all-or-nothing fashion. Either an action potential is elicited with stimuli at or above threshold, or an action potential is not elicited. Second, action potentials are very brief events, only a few milliseconds in duration. Initiating an action potential is somewhat analogous to applying a match to a fuse. A certain temperature is needed to ignite the fuse (i.e., the fuse has a threshold). A match that generates a greater amount of heat than the threshold temperature will not cause the fuse to burn any brighter or faster. Just as action potentials are elicited in an all-or-nothing fashion, they are also propagated in an all-or-nothing fashion. Once an action potential is initiated in one region of a neuron such as the cell body, that action potential will propagate along the axon (like a burning fuse) and ultimately invade the synapse where it can initiate the process of synaptic transmission.

In the example in Figure 3, only a single action potential was generated because the duration of each of the two suprathreshold stimuli was so brief that sufficient time was only available to initiate a single action potential (i.e., the stimulus ended before the action potential completed its depolarization-repolarization cycle). But, as shown in the animations of Figure 4, longer-duration stimuli can lead to the initiation of multiple action potentials, the frequency of which is dependent on the intensity of the stimulus. Therefore, it is evident that the nervous system encodes information not in terms of the changes in the amplitude of action potentials, but rather in their frequency. This is a nearly universal property. The greater the intensity of a mechanical stimulus to a touch receptor, the greater the number of action potentials; the greater the amount of stretch to a muscle stretch receptor, the greater the number of action potentials; the greater the intensity of a light, the greater the number of action potentials that are transmitted to the central nervous system. Similarly, in the motor system, the greater the number of action potentials in a motor neuron, the greater will be the contraction of the muscle that receives a synaptic connection from that motor neuron. Engineers call this type of information coding pulse frequency modulation.
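The relationship between stimulus intensity and firing frequency can be illustrated with a minimal sketch. It uses a leaky integrate-and-fire model, which is a common textbook simplification and is not taken from this chapter. The resting potential of -60 mV follows the text; the threshold of -50 mV, the 10 msec membrane time constant, and the function name `count_spikes` are assumptions chosen only for illustration.

```python
def count_spikes(stimulus_mv, duration_ms=100.0, dt_ms=0.1,
                 v_rest=-60.0, v_thresh=-50.0, tau_ms=10.0):
    """Count all-or-nothing spikes during a sustained stimulus.

    stimulus_mv is the steady depolarization (in mV) the stimulus would
    produce if the cell never fired. Threshold and time constant are
    assumed values, not measurements.
    """
    v = v_rest
    spikes = 0
    steps = int(round(duration_ms / dt_ms))
    for _ in range(steps):
        # The leak pulls the potential back toward rest; the stimulus depolarizes it.
        v += dt_ms * (-(v - v_rest) + stimulus_mv) / tau_ms
        if v >= v_thresh:
            # Every spike is counted identically (all-or-nothing), then the
            # potential is reset to rest.
            spikes += 1
            v = v_rest
    return spikes


for stimulus in (5.0, 15.0, 30.0):
    print(stimulus, "mV stimulus ->", count_spikes(stimulus), "spikes in 100 msec")
```

In this sketch the weakest stimulus never reaches threshold and produces no spikes, while the two stronger stimuli produce progressively more spikes in the same time window. Intensity is therefore carried by the spike count, not by spike size.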

Figure 4

Synaptic potentials and synaptic integration

Figure 5 illustrates three neurons. The one colored green will be referred to as an excitatory neuron for reasons that will become clear shortly. It makes a connection to the postsynaptic neuron colored blue. The traces below (press “Play”) illustrate the consequences of initiating an action potential in the green neuron. That action potential in the presynaptic neuron leads to a depolarization of the postsynaptic cell. The membrane potential changes from its resting value of about -60 millivolts to a less negative, more depolarized state. This potential is called an excitatory postsynaptic potential (EPSP). It is “excitatory” because it moves the membrane potential toward the threshold and it is “postsynaptic” because it is a potential recorded on the postsynaptic side of the synapse. Generally (and this is an important point), a single action potential in a presynaptic cell does not produce an EPSP large enough to reach threshold and trigger an action potential. But, if multiple action potentials are fired in the presynaptic cell, the corresponding multiple excitatory potentials can summate through a process called temporal summation to reach threshold and trigger an action potential. EPSPs can be viewed as a “go signal” to the postsynaptic neuron to transmit information through a network pathway.

Figure 5

The red-colored neuron in Figure 5 is referred to as an inhibitory neuron. Like the green neuron, it also makes a synaptic contact with the blue postsynaptic neuron. It also releases a chemical messenger, but the consequences of the transmitter from the red cell binding to receptors on the postsynaptic cell are opposite to the consequences of the transmitter released by the green neuron. The consequence of an action potential in the red presynaptic neuron is to hyperpolarize the blue postsynaptic neuron. The membrane potential is more negative than it was before (a hyperpolarization) and therefore the membrane potential is farther away from threshold. This type of potential is called an inhibitory postsynaptic potential (IPSP) because it tends to prevent the postsynaptic neuron from firing an action potential. This is a “stop signal” for the postsynaptic cell. So the green neuron says “go” and the red neuron says “stop”. Now what is the postsynaptic neuron to do?

Neurons are like adding machines. They are constantly adding up the excitatory and the inhibitory synaptic input in time (temporal summation) and over the area of the dendrites receiving synaptic contacts (spatial summation), and if that summation is at or above threshold they fire an action potential. If the sum is below threshold, no action potential is initiated. This is a process called synaptic integration and is illustrated in Figure 5. Initially, two action potentials in the green neuron produced summating EPSPs that fired an action potential in the blue neuron. But, if an IPSP from the inhibitory neuron occurs just before two action potentials in the excitatory neuron, the summation of the one IPSP and the two EPSPs is below threshold and no action potential is elicited in the postsynaptic cell. The inhibitory neuron (and inhibition in general) is a way of gating or regulating the ability of an excitatory signal to fire a postsynaptic cell.
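The "adding machine" idea can be sketched numerically. The example below sums exponentially decaying postsynaptic potentials and compares the peak with a threshold. Only the -60 mV resting potential comes from the text; the PSP amplitudes, the decay time constant, the -50 mV threshold, and the function names are assumed values for illustration.

```python
import math

V_REST = -60.0    # resting potential from the text (mV)
V_THRESH = -50.0  # assumed threshold (mV)
TAU_MS = 10.0     # assumed decay time constant of each PSP (msec)


def peak_potential(events, t_end_ms=30.0, dt_ms=0.1):
    """Return the peak membrane potential produced by a list of PSPs.

    events is a list of (onset_time_ms, amplitude_mv); positive amplitudes
    are EPSPs and negative amplitudes are IPSPs. Each PSP jumps to its
    amplitude at onset and then decays exponentially (a simplification).
    """
    peak = V_REST
    steps = int(round(t_end_ms / dt_ms))
    for k in range(steps + 1):
        t = k * dt_ms
        v = V_REST + sum(amp * math.exp(-(t - onset) / TAU_MS)
                         for onset, amp in events if t >= onset)
        peak = max(peak, v)
    return peak


def fires(events):
    """The cell fires if the summed potential reaches threshold."""
    return peak_potential(events) >= V_THRESH


one_epsp = [(1.0, 6.0)]
two_epsps = [(1.0, 6.0), (3.0, 6.0)]
ipsp_then_two_epsps = [(0.0, -5.0), (1.0, 6.0), (3.0, 6.0)]

print("one EPSP fires:", fires(one_epsp))
print("two EPSPs fire:", fires(two_epsps))
print("IPSP followed by two EPSPs fires:", fires(ipsp_then_two_epsps))
```

With these assumed numbers, a single EPSP stays below threshold, two closely spaced EPSPs summate to reach it, and a preceding IPSP keeps the same two EPSPs below threshold. This mirrors the sequence described for Figure 5.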

Neuronal Networks

Micronetwork motifs

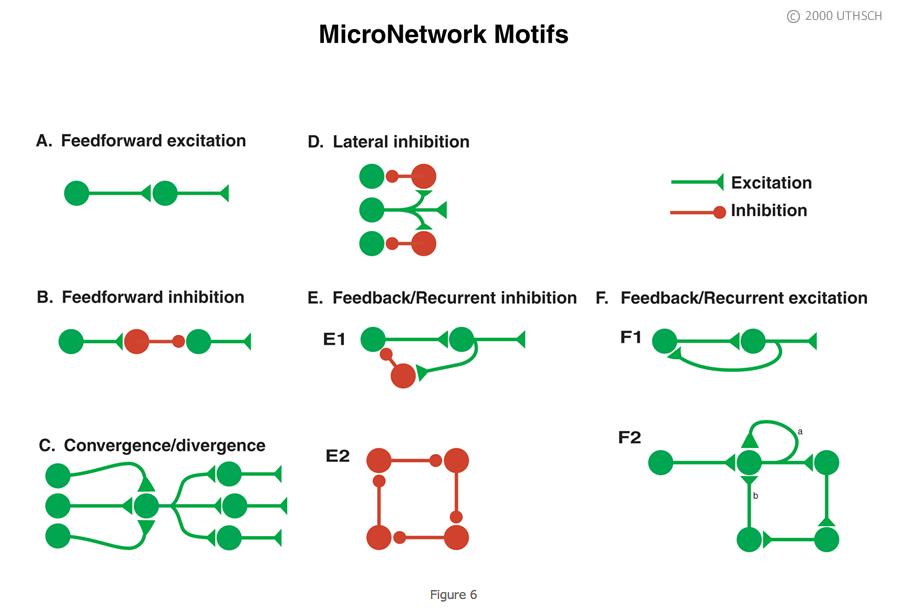

As indicated earlier in the chapter, a neuron can receive contacts from up to 10,000 presynaptic neurons, and, in turn, any one neuron can contact up to 10,000 postsynaptic neurons. The combinatorial possibilities could give rise to enormously complex neuronal circuits or network topologies, which might be very difficult to understand. But despite the potentially vast complexity, much can be learned about the functioning of neuronal circuits by examining the properties of a subset of simple circuit configurations. Figure 6 illustrates some of those microcircuit or micronetwork motifs. Although simple, they can do much of what needs to be done by a nervous system.


Feedforward excitation. Allows one neuron to relay information to its neighbor. Long chains of these can be used to propagate information through the nervous system.

Feedforward inhibition. A presynaptic cell excites an inhibitory interneuron (an interneuron is a neuron interposed between two neurons) and that inhibitory interneuron then inhibits the next follower cell. This is a way of shutting down or limiting excitation in a downstream neuron in a neural circuit.

Convergence/Divergence. One postsynaptic cell receives convergent input from a number of different presynaptic cells and any individual neuron can make divergent connections to many different postsynaptic cells. Divergence allows one neuron to communicate with many other neurons in a network. Convergence allows a neuron to receive input from many neurons in a network.

Lateral inhibition. A presynaptic cell excites inhibitory interneurons and they inhibit neighboring cells in the network. As described in detail later in the Chapter, this type of circuit can be used in sensory systems to provide edge enhancement.

Feedback/recurrent inhibition. In Panel E1, a presynaptic cell connects to a postsynaptic cell, and the postsynaptic cell in turn connects to an interneuron, which then inhibits the presynaptic cell. This circuit can limit excitation in a pathway. Some initial excitation would be shut off after the red interneuron becomes active. In Panel E2, each neuron in the closed chain inhibits the neuron to which it is connected. This circuit would appear to do nothing, but, as will be seen later in the Chapter, it can lead to the generation of complex patterns of spike activity.

Feedback/recurrent excitation. In Panel F1, a presynaptic neuron excites a postsynaptic neuron and that postsynaptic neuron excites the presynaptic neuron. This type of circuit can serve a switch-like function because once the presynaptic cell is activated that activation could be perpetuated. Activation of the presynaptic neuron could switch this network on and it could stay on. Panel F2 shows variants of feedback excitation in which a presynaptic neuron excites a postsynaptic neuron that can feed back to excite itself (a, an autapse) or excite other neurons that ultimately feed back to it (b).

These simple motifs are ubiquitous components of many neural circuits. Let’s examine some examples of what these networks can do.

Feedforward excitation and feedforward inhibition

One of the best understood microcircuits is the circuit that mediates simple reflex behaviors. Figure 7 illustrates the circuit for the so-called knee jerk or stretch reflex. A neurologist strikes the knee with a rubber tapper, which elicits an extension of the leg. This test is used as a simple way to examine the integrity of some of the sensory and motor pathways in the spinal cord. The tap of the hammer stretches the muscle and leads to the initiation of action potentials in sensory neurons within the muscle that are sensitive to stretch. (The action potentials are represented by the small bright “lights” in the animation.) The action potentials are initiated in an all-or-nothing fashion and propagate into the spinal cord where the axon splits (bifurcates) into two branches.

Figure 7

Let’s first discuss the branch to the left that forms a synaptic connection (green triangle) with an Extensor (E) motor neuron (colored blue). The action potential in the sensory neuron invades the synaptic terminal of the sensory neuron causing the release of transmitter and subsequent excitation of the motor neuron. The stretch to the muscle leads to an action potential in the motor neuron (MN), which then propagates out the peripheral nerve to invade the synapse at the muscle, causing the release of transmitter and an action potential in the muscle. The action potential in the muscle cell leads to a contraction of the muscle and an extension of the limb. So, here we have a simple feedforward excitation circuit that mediates a behavior.

Now let’s examine the right branch of the axon of the sensory neuron of Figure 7. The action potential in the sensory neuron invades the synaptic terminal of the sensory neuron causing the release of transmitter, and subsequent excitation of the postsynaptic interneuron colored black. This neuron is called an interneuron because it is interposed between one neuron (here the SN) and another neuron (here the MN). The excitation of the interneuron leads to the initiation of an action potential and the subsequent release of transmitter from the presynaptic terminal of the interneuron (black triangle), but for this branch of the circuit, the transmitter leads to an IPSP in the postsynaptic flexor (F) motor neuron (colored red). The functional consequence of this feedforward inhibition is to decrease the probability of the flexor motor neuron becoming active and producing an inappropriate flexion of the leg.

Convergence and divergence

The simplified circuit mediating the stretch reflex is summarized in Figure 8. However, the proper function of the circuit of the stretch reflex also relies on convergence and divergence. A single sensory neuron has multiple branches that diverge and make synaptic connections with many individual motor neurons (click on “Divergence”). Therefore, when the muscle contracts as a result of the neurologist’s tapper, it does so because multiple muscle fibers are activated simultaneously by multiple motor neurons. Also, when the muscle is stretched, not one, but multiple sensory neurons are activated and these sensory neurons all project into the spinal cord where they converge onto individual extensor motor neurons (click on “Convergence”). So, the stretch reflex is due to the combined effects of the activation of multiple sensory neurons and extensor motor neurons.

Figure 8

Figure 9

Edge enhancement. Lateral inhibition is very important for processing sensory information. One example is a phenomenon in the visual system called edge enhancement. Figure 9 illustrates two bands, a dark gray band on the left, and a light gray band on the right. Although the dark band and the light band are of uniform luminance throughout each field, a close examination reveals that the light gray band appears somewhat lighter at the border of the dark gray band than it is in the other regions of the field. In contrast, the dark gray band appears somewhat darker at the border than at other regions of the dark field. This is the phenomenon of edge enhancement, which helps the visual system to extract important information from visual scenes. Edge enhancement is mediated, at least in part, by lateral inhibition in the retina.

Let’s first consider a circuit without lateral inhibition (Figure 10, click on “Without Lateral Inhibition”). Light falls on the retina (Part A) and the intensity can be described by the step-like gradient (Part B). As a simplification, assume that the dark gray region has an intensity of five units and the light gray region has an intensity of ten units. The gradient of light activates the photoreceptors and the photoreceptors make synaptic connections to second-order neurons. Assume that a light intensity of 5 units leads to 5 spikes/s and a light intensity of 10 units leads to 10 spikes/s in the photoreceptors (Part C), and that the synaptic strength (here indicated as +1) is sufficient for the second-order neurons to fire at the same rates, 5 spikes/s and 10 spikes/s respectively. If no further processing of the information occurred, the gradient that is perceived would be exactly the same as the gradient of the light intensity (Part B, red trace). But that is not what is perceived and lateral inhibition explains the difference.

Figure 10

Now consider the expanded circuit with lateral inhibition (click on “With Lateral Inhibition”). Each one of the photoreceptors makes inhibitory synaptic connections with its neighboring second-order neurons. The strength of the inhibition (denoted by the -0.2) is less than the strength of the excitation (denoted by the +1). Before looking at the border, consider the output of the circuit at the uniform areas of each field. Far to the right side of the border all of the cells are receiving the same excitation and the same inhibition. Without lateral inhibition, the light intensity of 10 units would produce 10 spikes/s in the second-order neuron. But because of the inhibitory connections from neighbor neurons to the right and left, the output is reduced to 6 spikes/s. The same is true for cells far to the left of the border, but the magnitude of the excitation is less and correspondingly the magnitude of the inhibition is less. The key processing occurs at the border or edge. Note that the neuron just to the right of the border receives the same inhibition from the neuron to its right but receives less inhibition from the neuron to its left on the other side of the border. Therefore, it receives more net excitation and it has an output of 7 spikes/s rather than the 6 spikes/s of its neighbor to the right. Now look at the neuron to the left of the border. It receives weak inhibition from its neighbor to the left, but stronger inhibition from its neighbor to the right on the other side of the border. Therefore, it receives less net excitation and it has an output of 2 spikes/s rather than the 3 spikes/s of its neighbor to the left. So as a result of lateral inhibition the information transmitted to the nervous system and the gradient that is perceived would be a version of the original one with an enhanced border or edge (Figure 10B)!
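The arithmetic of this example can be checked with a short sketch. It uses the weights given in the text: +1 for excitation from a cell's own photoreceptor and -0.2 for inhibition from each of the two neighboring photoreceptors. The function name, the eight-cell array, and the treatment of the outermost cells (assumed to have an outside neighbor at the same light intensity) are illustrative assumptions and are not taken from Figure 10.

```python
def second_order_output(light, excitation=1.0, inhibition=0.2):
    """Firing rate of each second-order neuron (spikes/s).

    Each neuron is excited by its own photoreceptor and inhibited by the
    photoreceptors immediately to its left and right.
    """
    n = len(light)
    rates = []
    for i in range(n):
        # Assumption: beyond the ends of the array, the field continues at
        # the same intensity, so edge cells reuse their own value.
        left = light[max(i - 1, 0)]
        right = light[min(i + 1, n - 1)]
        rates.append(excitation * light[i] - inhibition * (left + right))
    return rates


# Dark field (5 units) on the left, light field (10 units) on the right.
light = [5, 5, 5, 5, 10, 10, 10, 10]
rates = second_order_output(light)
print([round(r, 3) for r in rates])
```

Cells far from the border settle at 3 spikes/s (dark field) and 6 spikes/s (light field), while the two cells flanking the border come out at 2 and 7 spikes/s. That is the enhanced edge described above.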

Mach bands. The simple retinal circuit with lateral inhibition can account for the phenomenon of edge enhancement. It can also account for a visual illusion known as Mach bands. Figure 11 illustrates a gradient of light and dark vertical bands and across these bands is a thin horizontal line. It appears as though the horizontal line has an uneven distribution of intensities, being darker in the region of the light vertical gradients and lighter in the region of the dark vertical gradients. This is a visual illusion. The illusion can be revealed by placing a mask over the vertical gradient. (Press “Play” to add the mask.) Now you can see that the horizontal bar has uniform intensity. It is perceived darker in some regions because the cells in the retina that respond to the darker region of the horizontal bar are strongly inhibited by the cells responding to the bright region of the vertical band. In contrast, the bar is perceived brighter in some regions because the cells in the retina that respond to the lighter region of the horizontal bar are only weakly inhibited by the cells responding to the dark region of the vertical band.

Figure 11

Feedback/recurrent inhibition

Feedback inhibition in microcircuits. Feedback inhibition plays a general role in damping excitation through a neural circuit. A classic example is the Renshaw cell in the spinal cord. The axon of a spinal motor neuron branches. One branch innervates muscle as described earlier (e.g., Figure 7) and the other branch makes an excitatory synaptic connection with an interneuron called the Renshaw cell. The interneuron in turn inhibits the motor neuron, thereby closing the loop. Another example of feedback inhibition is found in the hippocampus. CA3-type pyramidal cells make excitatory connections to basket cells and the basket cells feed back to inhibit the CA3 cells. The term recurrent inhibition is applied to simple feedback inhibition circuits such as the Renshaw circuit in the spinal cord and the basket cell circuit in the hippocampus.

Feedback inhibition in nanocircuits. Feedback inhibition is not only prevalent in many neuronal circuits; it is also prevalent in biochemical circuits. Here it can serve as a substrate for generating oscillations. These can cover multiple time scales from seconds to days depending on the molecular components of the circuit.

Figure 12. From Byrne, Canavier, Lechner, Clark and Baxter, 1996.

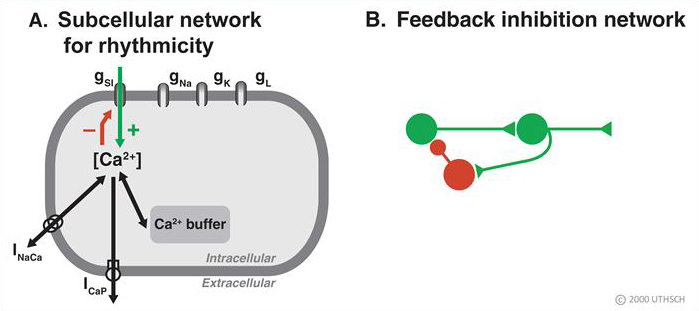

Endogenous bursting behavior in neurons. The idealized neuron described earlier in the Chapter was silent in the absence of stimulation (e.g., Figure 3). However, some neurons fire action potentials in the absence of stimulation, and, in some cases, the firing patterns can exhibit a bursting pattern in which successive periods of high-frequency spike activity are followed by quiescent periods. Such neuronal properties could be important for generating rhythmic behaviors such as respiration. Figure 12 is an example of a recording from an invertebrate neuron that has an endogenous bursting rhythm. This particular neuron is called the parabolic burster because the inter-spike intervals are long at the beginning and end of the burst cycle, but very brief in the middle of the cycle. The cell fires a burst of action potentials and then becomes silent, but soon another burst occurs and this process continues indefinitely about every ten to fifteen seconds. The bursting occurs even if the neuron is surgically removed from the ganglion and placed in culture so there are no synaptic connections from other neurons. So a neuronal network is not necessary for this rhythm; it is endogenous. But, it does involve a nanocircuit within the cell. Figure 13A illustrates a very simplified version of that network that emphasizes the key principle of operation. Critical for this network function is a channel in the membrane (labeled gSI), which is permeable to Ca2+. Because the concentration of Ca2+ is relatively high in the extracellular medium and low inside the cell, Ca2+ will move down its concentration gradient and in so doing will depolarize the cell. Eventually, the depolarization reaches threshold and the cell begins to fire. The firing leads to additional influx of Ca2+ (green arrow) and accumulation of Ca2+ within the cell. The key step is that the accumulation of Ca2+ inhibits (red arrow) the further influx of calcium and terminates the burst. The burst remains terminated as long as the levels of intracellular Ca2+ remain elevated. But the levels of Ca2+ do not remain elevated for long. They are reduced by intracellular buffers and removed from the cell by pumps (INaCa and ICaP). As intracellular levels of Ca2+ are reduced, the inhibition of the channel is removed (disinhibition) and the neuron begins to depolarize again and another burst is initiated. Essentially, what is seen here at the nanonetwork level is a recapitulation of a feedback inhibitory network (Figure 13B, hover over the area to the right of Fig. 13A). An initial excitatory process leads to activation of an inhibitory process, which feeds back to shut off the excitatory process. In such a network, oscillations will result if the excitatory drive is continuous, but the inhibitory process wanes in its effectiveness.
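The principle that continuous excitatory drive combined with waning inhibition produces oscillations can be illustrated with a deliberately crude sketch. It is not the model behind Figure 13. Firing is treated as an on/off state, intracellular calcium is reduced to a single variable in arbitrary units, and the two calcium levels at which the burst stops and restarts are assumed values that stand in for the slow depolarization described in the text. All parameter values and the function name are hypothetical.

```python
def simulate_burster(total_time=20.0, dt=0.01, influx=1.0, removal=0.5,
                     ca_stop=1.0, ca_restart=0.3):
    """Toy feedback-inhibition oscillator (arbitrary units throughout).

    While the cell fires, calcium enters at a constant rate. Buffers and
    pumps remove calcium at a rate proportional to its level. High calcium
    terminates the burst; once calcium has fallen again, the inhibition
    is lifted and a new burst starts.
    """
    ca = 0.0
    firing = True
    bursts = 1          # the simulation starts inside the first burst
    firing_trace = []
    steps = int(round(total_time / dt))
    for _ in range(steps):
        entry = influx if firing else 0.0
        ca += dt * (entry - removal * ca)
        if firing and ca >= ca_stop:
            firing = False      # accumulated calcium shuts off the burst
        elif not firing and ca <= ca_restart:
            firing = True       # disinhibition: the next burst begins
            bursts += 1
        firing_trace.append(firing)
    return bursts, firing_trace


bursts, firing_trace = simulate_burster()
print("bursts started:", bursts)
```

With these assumed parameters the cell alternates between firing and silent phases for the whole run. If `removal` is set to zero, calcium never falls, the inhibition never wanes, and only the first burst occurs.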

Figure 13


Figure 14. Modified from Hastings et al., Nature Rev. Neurosci., 2003.

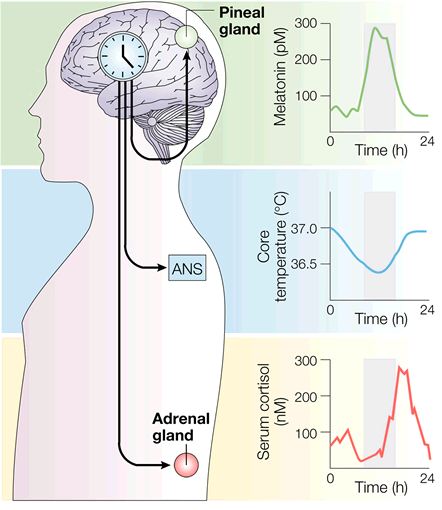

Circadian rhythms. A second example of feedback inhibition is the nanocircuit for the gene regulation that underlies circadian rhythms. The vertebrate circadian rhythm is due to the operation of a group of neurons in a region of the brain called the suprachiasmatic nucleus, which is located just above the optic chiasm. Those neurons have profound effects on the release of hormones such as melatonin and cortisol, as well as on autonomic functions such as body temperature (Figure 14). Despite the profound effects of this oscillator, its operation reduces to a very simple circuit, and indeed not a neural circuit, but rather another nanocircuit. The basic mechanism seems to be conserved across all animal species including humans. Figure 15 is a simplified schematic diagram of the basic components. Several genes are involved but the core mechanism involves a gene called per, where per is for period. This gene was first identified in the fruit fly Drosophila but is also present in vertebrates. The per gene leads to the production of per messenger RNA. The per mRNA leaves the nucleus and enters the cytoplasm where it leads to the synthesis of PER protein. PER diffuses or is transported back into the nucleus where it represses the further transcription of the per gene. Conceptually, this system is very similar to the mechanism for the bursting neuron discussed above. The gene is activated, it produces the message and the protein, and the protein feeds back to inhibit the gene expression. But how does the cycle repeat itself? The key mechanism is degradation of PER. PER protein is degraded over a 24-hour period. So as the PER protein is degraded the inhibition or repression is removed (disinhibition), allowing this gene to start making messenger RNA and protein all over again. So once this cycle begins, it is repeated over and over again with a 24-hour period. This is the core mechanism underlying circadian rhythms and the powerful effects that they have on a number of different physiological systems. Basically, our circadian rhythms all start with a molecular feedback inhibition network.

Figure 15

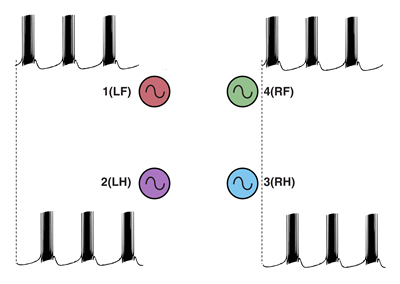

Feedback inhibition in ring circuits. Recurrent inhibition can, at least in principle, explain the generation of complex motor patterns, an example of which is quadrupedal locomotion. Quadrupedal locomotion is interesting because quadrupeds are capable not only of moving their four legs, but also of generating different cycles of activity called gaits. Figure 16 illustrates four gaits. The first panel is a walk. The sequence begins with extension of the left front limb. This is followed by extensions of the right hind limb, the right front limb and the left hind limb. In the trot (second panel of Figure 16), the left front and right hind limbs are in phase with each other and 180 degrees out of phase with the right front and left hind limbs. In the bound (third panel), the left front and right front limbs are in phase, but 180 degrees out of phase with the left hind and right hind limbs. The gallop (fourth panel) is a variant of the bound in which there is a slight phase difference between the right and left front limbs and between the right and left hind limbs.
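The four gaits can be summarized as a table of limb phases, expressed as fractions of one stride cycle. The sketch below is illustrative only: the equal quarter-cycle spacing in the walk, the 0.1-cycle offset in the gallop, and the choice of which limb leads in the gallop are assumptions, since the text gives only the order of the limbs and a "slight" phase difference.

```python
# Phase of each limb's extension as a fraction of one stride cycle.
# LF/RF = left/right front limb, LH/RH = left/right hind limb.
GAITS = {
    "walk":   {"LF": 0.0, "RH": 0.25, "RF": 0.5, "LH": 0.75},  # even spacing assumed
    "trot":   {"LF": 0.0, "RH": 0.0, "RF": 0.5, "LH": 0.5},
    "bound":  {"LF": 0.0, "RF": 0.0, "LH": 0.5, "RH": 0.5},
    "gallop": {"LF": 0.0, "RF": 0.1, "LH": 0.5, "RH": 0.6},    # 0.1 offset is assumed
}


def footfall_groups(gait):
    """Return limbs grouped by phase, in the order they extend within one cycle."""
    groups = {}
    for limb, phase in GAITS[gait].items():
        groups.setdefault(phase, []).append(limb)
    return [sorted(groups[phase]) for phase in sorted(groups)]


for name in GAITS:
    print(name, footfall_groups(name))
```

Limbs that share a phase appear in the same group, so the trot and the bound each reduce to two groups while the walk and the gallop have four.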

Figure 16

How does the nervous system generate these gaits? And are separate neuronal circuits necessary for each one? Unfortunately, neuroscientists do not know the answers to these questions, but it is instructive to examine some possibilities. This is the approach of a field of neuroscience called Computational and Theoretical Neuroscience. One way to generate a gait is illustrated in Figure 17. Take four individual neurons, each with endogenous bursting activity like the one illustrated previously in Figure 12, and assign the activity of each neuron to the control of a specific limb. The neurons could be “started” so that they have the appropriate phase relationships to generate a gait such as the bound illustrated in Figure 16. The difficulty would be in starting each neuron at precisely the right time. Another problem would be slight “drifts” in the oscillatory periods of the four independent neurons, which over time would cause the pattern to become uncoordinated (Figure 18). This dog is not going to win any races, and it is probably not going to be able to walk.
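The drift problem is simple arithmetic, and a short sketch makes it concrete. Here each independent bursting neuron is reduced to a free-running clock with its own period; the specific periods (mismatched by 1 to 2 percent) are hypothetical values chosen only for illustration.

```python
def free_running_phases(periods, start_phases, t):
    """Phase of each uncoupled oscillator at time t, as a fraction of a cycle."""
    return [(p0 + t / period) % 1.0 for p0, period in zip(start_phases, periods)]


# Bound gait at t = 0: front limbs in phase, hind limbs half a cycle later.
limbs = ["LF", "RF", "LH", "RH"]
start = [0.0, 0.0, 0.5, 0.5]
periods = [1.00, 1.01, 0.99, 1.02]   # seconds; hypothetical small mismatches

for t in (0, 10, 25, 50):
    phases = free_running_phases(periods, start, t)
    print(t, dict(zip(limbs, (round(p, 2) for p in phases))))
```

Because nothing ties the clocks together, the phase difference between any two limbs grows steadily with time, and the two front limbs that started in phase are about half a cycle apart after 50 seconds.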

Figure 17

Figure 18

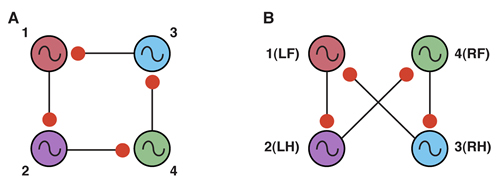

So clearly the neurons need to be coupled. One way of doing this is to use a recurrent inhibition circuit consisting of four coupled neurons that form a so-called “ring” circuit. Each neuron in the circuit has endogenous bursting activity, and each neuron is coupled to the next with an inhibitory synaptic connection (Figure 19A).
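The stabilizing effect of coupling can be illustrated with a highly simplified phase model. This is a sketch under strong assumptions and not the conductance-based ring model: each bursting neuron is reduced to a single phase variable, and the inhibitory synapse from one neuron to the next is replaced by a hypothetical sinusoidal coupling term that pulls each neuron toward a quarter-cycle lag behind its predecessor. The periods and the `coupling` strength are illustrative values.

```python
import math


def simulate_ring(coupling, periods=(1.00, 1.01, 0.99, 1.02), duration=20.0, dt=0.001):
    """Return each neuron's lag behind its ring predecessor, in cycles, at the end."""
    n = len(periods)
    target = 2 * math.pi / n                      # preferred lag: a quarter cycle
    omega = [2 * math.pi / p for p in periods]    # intrinsic angular frequencies
    theta = [-i * target for i in range(n)]       # start with quarter-cycle spacing
    for _ in range(round(duration / dt)):
        # theta[i - 1] wraps around, so neuron 0 is driven by the last neuron.
        rates = [
            omega[i] + coupling * math.sin(theta[i - 1] - theta[i] - target)
            for i in range(n)
        ]
        theta = [th + dt * r for th, r in zip(theta, rates)]
    return [((theta[i - 1] - theta[i]) / (2 * math.pi)) % 1.0 for i in range(n)]


print("uncoupled lags:", [round(x, 3) for x in simulate_ring(coupling=0.0)])
print("coupled lags:  ", [round(x, 3) for x in simulate_ring(coupling=2.0)])
```

With the coupling set to zero the lags wander away from a quarter cycle, as in the uncoupled case; with coupling the four phases stay close to quarter-cycle spacing despite the mismatched periods.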

Figure 19. Modified from Canavier, Butera, Dror, Baxter, Clark and Byrne, 1997.

To obtain the correct phase relationships for gaits, rather than assigning Neuron 3 to the right front limb, it is assigned to control the right hind limb, and Neuron 4 is assigned to control the right front limb (a simple twist of the circuit) (Figure 19B). When implemented in a computer simulation, this single circuit is capable of generating quadrupedal gaits. Moreover, the same circuit, with just small changes in the properties of the individual neurons, can generate each of the four gaits illustrated in Figure 16 (Figure 20).

Figure 20. Panels: Walk, Trot, Bound, Gallop.

This result indicates an important point about neural networks. In order to understand them, it is necessary to understand not just the topology of the network, but also the nature of the connections between the neurons (whether they are excitatory or inhibitory), as well as the properties of the individual nodes (i.e., the neurons). This simulation also illustrates a phenomenon called dynamic reconfiguration. It is not necessary to have four different networks to generate these four different gaits; it can all be done with a single circuit. The actual circuit generating quadrupedal gaits is more complex than that of Figure 19. The interested reader is referred to a recent review by Ole Kiehn (see Further Reading).

Feedback/recurrent excitation

Recurrent excitation in nanocircuits and microcircuits appears to be critical for learning and memory processes. Learning involves changes in the biophysical properties of neurons and changes in synaptic strength. Accumulating evidence indicates positive feedback within biochemical cascades and gene networks is an important component for the induction and maintenance of these changes. Moreover, recurrent excitation is found in at least some microcircuits involved in memory processes. A prime example is found within the CA3 region of the hippocampus.

Figure 21. Modified from Byrne and Roberts, 2009.

Figure 21 illustrates key features of the CA3 recurrent excitatory circuit. Six different hippocampal pyramidal neurons are labeled U, V, W, X, Y, and Z. Each of these neurons receives a synaptic connection from one of the presynaptic neurons labeled a, b, c, d, e, and f. These presynaptic neurons can be either active or inactive, with a 0 and a black color representing an inactive neuron and a 1 and a green color indicating an active one. An important aspect of this circuitry is that the synaptic connections from the input pathway are sufficiently strong to activate (fire) the pyramidal neuron to which they are connected. For example, if neuron a is activated, neuron Z will be activated, which is represented as a 1 on the Output bar. This topology is nothing more than feedforward excitation. It is the recurrent excitation that makes this circuit special. Neuron Z and the other pyramidal neurons have axon collaterals that feed back to connect with themselves. But they do not connect only with themselves. Each neuron also makes a connection with each of the five other pyramidal neurons in the circuit. So every pyramidal neuron receives convergent information from all the other cells in the network, and, in turn, the output of each pyramidal neuron diverges to make synaptic connections with all the other pyramidal neurons in the circuit. (Therefore, this recurrent excitation motif has embedded within it the convergence and divergence motifs.) The matrix of connectivity consists of 36 elements.

In order for this network to learn anything, a synaptic plasticity learning rule needs to be embedded into the circuit. One that is widely accepted is known as the Hebb Learning Rule. Essentially, it states that a synapse will change its strength if that synapse is active (i.e., releases transmitter) and, at the same time, the postsynaptic cell is active. The combination of this learning rule and the recurrent excitatory circuit leads to some interesting emergent properties. For example, if neuron Z is activated by input a, the strength of its connection to itself (synapse 1) will change, as indicated by the green colored synapse in Figure 21. However, synapse 1 will not be the only synapse that is strengthened. For example, synapse 13 will also be strengthened because neuron Z was active at the same time neuron X was activated by input c. In contrast, synapse 7 is not strengthened because neuron Y was not active at the same time as neuron Z. The net effect of this convergence and divergence and the learning rule is that an initial pattern of input activity will be stored as changes in the elements of the connectivity matrix. This circuit has therefore been called an auto-association network. An important concept here is that the “memory” is not in any one synapse; it is distributed in the network.
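The Hebb rule and the 36-element connectivity matrix can be written out in a few lines. This is a minimal sketch with several assumptions: weights are stored as changes from an unspecified baseline, inputs a through f are assumed to drive neurons Z, Y, X, W, V and U in that order (the text confirms only a to Z and c to X), and the `recall` step with its threshold is a hypothetical addition that illustrates the pattern completion commonly attributed to auto-association networks.

```python
NEURONS = ["Z", "Y", "X", "W", "V", "U"]   # assumed pairing with inputs a..f, in order


def hebb_update(weights, activity):
    """Strengthen synapse j -> i whenever presynaptic j and postsynaptic i are co-active."""
    n = len(activity)
    return [[weights[i][j] + activity[i] * activity[j] for j in range(n)] for i in range(n)]


def recall(weights, cue, threshold=1):
    """One pass through the recurrent collaterals: fire if cued or driven by stored weights."""
    n = len(cue)
    drive = [sum(weights[i][j] * cue[j] for j in range(n)) for i in range(n)]
    return [1 if cue[i] or drive[i] >= threshold else 0 for i in range(n)]


weights = [[0] * 6 for _ in range(6)]        # 6 x 6 = 36 recurrent synapses
pattern = [1, 0, 1, 0, 0, 0]                 # inputs a and c active, so Z and X fire
weights = hebb_update(weights, pattern)

strengthened = sum(sum(row) for row in weights)
completed = recall(weights, [1, 0, 0, 0, 0, 0])   # cue with input a only
print(strengthened, dict(zip(NEURONS, completed)))
```

In this run four of the 36 synapses change (Z to Z, Z to X, X to Z and X to X), while the synapse from Z onto the inactive neuron Y stays at baseline. Cueing with input a alone then reactivates X as well, which is one way to see that the stored pattern lives in the matrix as a whole rather than in any single synapse.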

Summary

Considerable progress has been made in understanding how different simple neural networks are involved in information processing and mediating behavior. Feedforward excitation and feedforward inhibition mediate reflex behaviors. Lateral inhibition is important for edge enhancement. Recurrent excitation is an important mechanism for memory. Recurrent inhibition can be important for generating locomotor behavior. Convergence and divergence are embedded in these microcircuits. The same kinds of network motifs are recapitulated in biochemical and gene networks.

The next level of understanding is at the level of the neuronal networks that mediate more complex, so-called higher-order functions of the brain. Understanding them is becoming possible through the use of electrophysiological and optical recording techniques, and modern imaging techniques such as functional magnetic resonance imaging (fMRI) and diffusion tensor imaging (DTI). fMRI allows investigators to identify areas of the brain that are engaged in cognitive tasks, whereas DTI allows visualization of pathways linking one brain region to another. Figure 22 (courtesy of Tim Ellmore, Ph.D., Dept. of Neurosurgery, The University of Texas Medical School at Houston) is a side view of the human brain showing the pathways interconnecting cortical areas revealed using DTI.

Figure 22. Courtesy of Tim Ellmore, Ph.D.

Object recognition is an example where progress is being made in understanding macrocircuits. As illustrated in Figure 23, processing of visual information starts in the retina and then engages multiple cortical regions such as the occipital cortex and the temporal cortex. Within this macrocircuit are modules that extract higher-order information. Each module presumably involves hundreds, if not thousands, of individual microcircuits. The challenge for the future is to determine how these modules work and how they interact with other modules. Although feedforward connections are present, feedback connections and lateral connections are widespread. The challenge is enormous, but perhaps achievement of the goal will be facilitated by taking advantage of what has been learned about the principles of nanocircuits and microcircuits. To understand the macrocircuits, it will be necessary to know more than the topology of the network interconnections. It will be necessary to know how each module functions and to understand the dynamics of the intermodule connections.

Figure 23. From Felleman and Van Essen, 1991.